

January 13, 2025


What Are Neural Networks? The Key to Artificial Intelligence (AI) Innovation

Artificial Intelligence (AI) has become a transformative force across industries, boosting efficiency and simplifying tasks. From generating text in seconds to recognizing images, AI is reshaping our world. But how do machines achieve this level of intelligence? How do computers “think”?

A few years ago, computers couldn’t write articles for us without human assistance. Today, they can generate high-quality content in seconds. What drove this remarkable leap in capability?

The answer lies in neural networks—the fundamental AI technology powering numerous cutting-edge applications.

A neural network is a computational system inspired by the human brain’s ability to process information. Although artificial neural networks (ANNs) mimic certain structural and functional elements of the brain, they are simplified models that do not replicate its complexity. Even so, they allow machines to learn from data and make decisions autonomously.

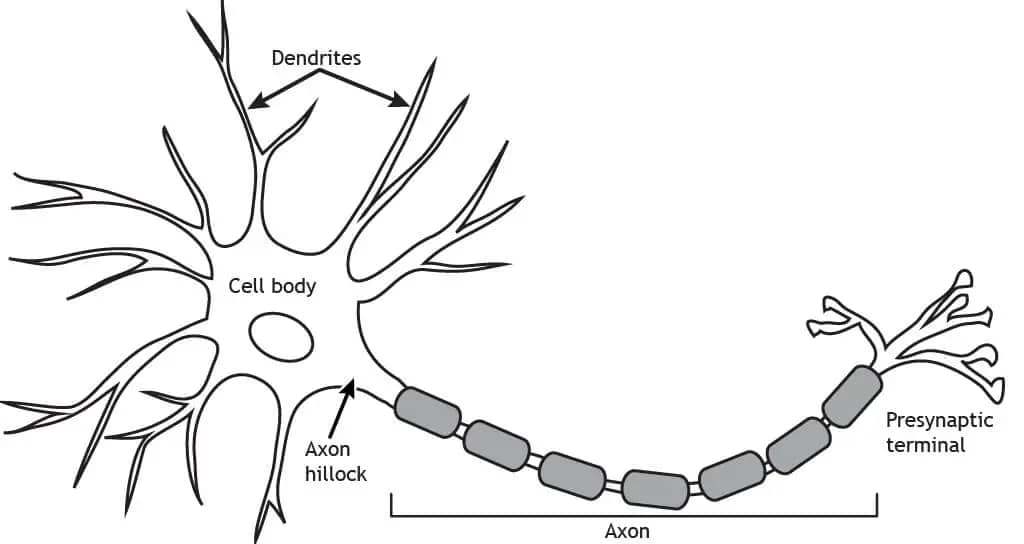

To understand artificial neural networks, we first need to grasp how the human brain processes information.

The brain consists of billions of neurons, specialized cells that receive and transmit information. These neurons form an interconnected network, collaborating to control every function in our body. Neurons communicate using both electrical and chemical signals, a process that is far more intricate than the simplified “firing” model typically used in neural networks.

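A neuron has three key components: dendrites, which receive incoming signals; the cell body (soma), which integrates those signals; and the axon, which carries the outgoing signal to other neurons.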

Neurons decide whether to “activate” based on the input they receive. This activation, or “firing,” is the result of complex electrical and chemical processes. If the neuron is sufficiently triggered, it sends a signal to the next neuron in the network.

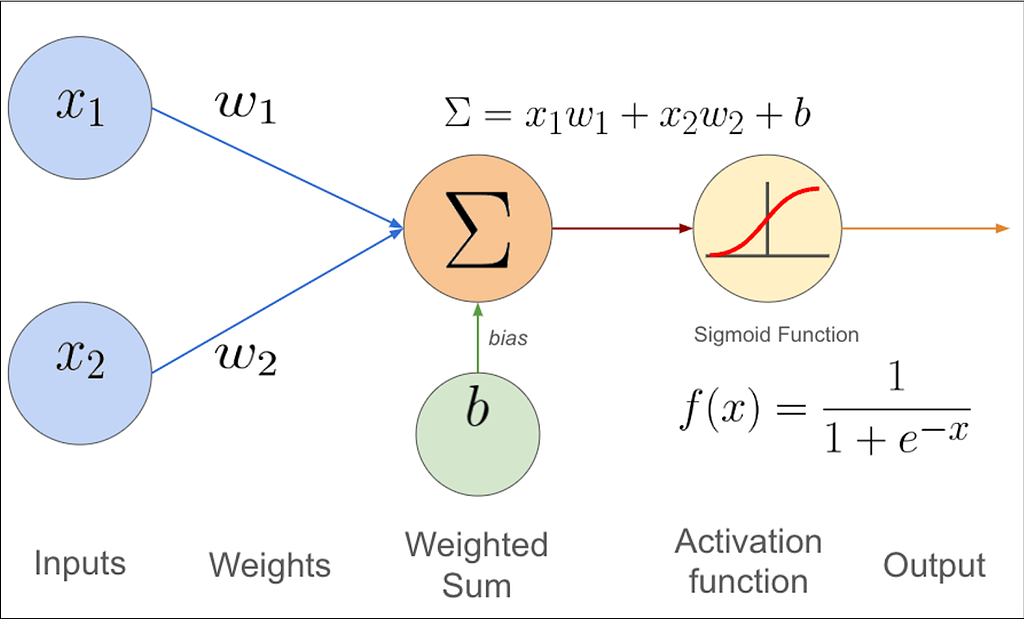

An artificial neural network is a mathematical model loosely based on the brain’s network of neurons. The basic unit of an ANN is the perceptron (or artificial neuron). A single perceptron is a simple model, but modern neural networks stack many of them in multiple layers that work together to solve more complex tasks.

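Here’s how a perceptron works: it receives one or more inputs, multiplies each input by a weight, adds up the weighted inputs, and passes that sum through an activation function that decides the output.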

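Common activation functions include the step function, the sigmoid, the hyperbolic tangent (tanh), and the rectified linear unit (ReLU).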
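As a minimal sketch, four widely used activation functions (step, sigmoid, tanh, and ReLU) might be written in Python as follows. The function names and the `threshold` parameter are illustrative choices, not something the article prescribes.

```python
import math

def step(z, threshold=0.0):
    """Binary step: output 1 if the input exceeds the threshold, else 0."""
    return 1 if z > threshold else 0

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Squash any real number into the range (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Pass positive values through unchanged and clip negatives to 0."""
    return max(0.0, z)
```

The step function gives the all-or-nothing "firing" behaviour described for biological neurons, while the smoother functions are generally preferred when a network is trained with gradient-based methods.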

Example: Suppose a perceptron has two inputs, x1 = 0.9 with weight w1 = 0.2 and x2 = 0.7 with weight w2 = 0.9.

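The perceptron computes: Output = ActivationFunction((x1⋅w1) + (x2⋅w2)) = ActivationFunction((0.9⋅0.2) + (0.7⋅0.9)) = ActivationFunction(0.18 + 0.63) = ActivationFunction(0.81)

If the activation function is a step function with a threshold of 0.75, the weighted sum of 0.81 exceeds the threshold, so the perceptron fires and outputs 1.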
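The arithmetic above can be checked with a short Python sketch. It assumes a step activation that fires when the weighted sum is strictly greater than the threshold; the `perceptron` helper is a hypothetical name used only for illustration.

```python
def perceptron(inputs, weights, threshold):
    """Return (weighted_sum, output) for a single step-activated perceptron."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    output = 1 if weighted_sum > threshold else 0
    return weighted_sum, output

# Values from the example: x1 = 0.9, w1 = 0.2, x2 = 0.7, w2 = 0.9.
weighted_sum, output = perceptron([0.9, 0.7], [0.2, 0.9], threshold=0.75)
print(round(weighted_sum, 2), output)  # prints: 0.81 1
```

Raising the threshold above 0.81 would leave the same inputs and weights below the cutoff, and the perceptron would output 0 instead.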

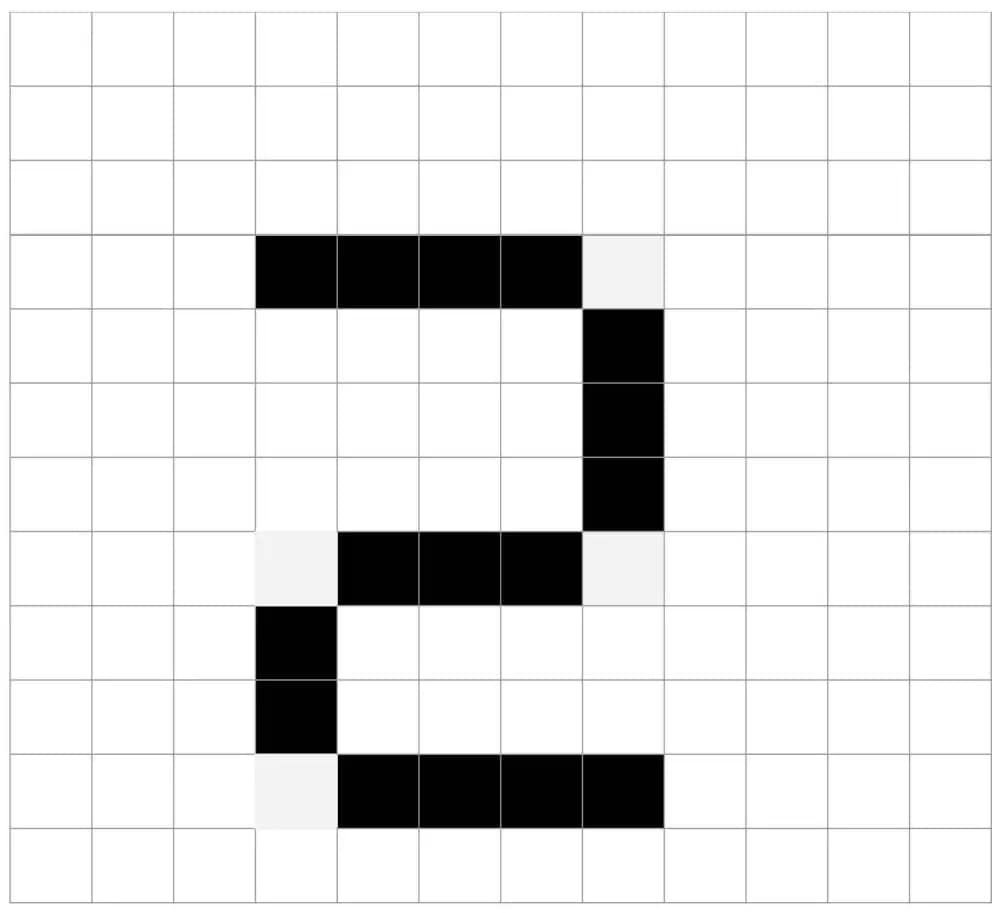

Let’s dive into a practical example of how neural networks work—recognizing handwritten numbers. Imagine a 12×12 grid representing a grayscale image of the number “2,” where each grid square corresponds to a pixel with a grayscale value between 0 (black) and 1 (white).

In real-world applications, networks can have multiple layers of perceptrons, each layer detecting increasingly complex patterns.
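To make the layered idea concrete, here is a rough sketch of a forward pass for the 12×12 example. Everything beyond the grid size is an assumption made for illustration: the placeholder image, the 16-neuron hidden layer, the 10 outputs (one per digit), the ReLU and softmax functions, and the random, untrained weights. It shows only how pixel values flow through layers, not a working digit recognizer.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the sketch is repeatable

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron takes a weighted sum of all inputs."""
    return [activation(sum(x * w for x, w in zip(inputs, neuron_w)) + b)
            for neuron_w, b in zip(weights, biases)]

def relu(z):
    return max(0.0, z)

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def random_layer(n_inputs, n_neurons):
    """Untrained placeholder weights; a real network would learn these."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]
               for _ in range(n_neurons)]
    return weights, [0.0] * n_neurons

# Placeholder 12x12 image: white background (1.0) with one dark row (0.0).
image = [[1.0] * 12 for _ in range(12)]
image[6] = [0.0] * 12

pixels = [value for row in image for value in row]  # flatten to 144 inputs

hidden_w, hidden_b = random_layer(144, 16)  # hidden layer size is an assumption
output_w, output_b = random_layer(16, 10)   # one output per digit 0-9

hidden = dense(pixels, hidden_w, hidden_b, relu)
scores = dense(hidden, output_w, output_b, lambda z: z)
probabilities = softmax(scores)
```

With untrained weights the ten probabilities are close to arbitrary. Training is what shapes the weights so that the output for the digit "2" ends up with the highest value.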

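To work effectively, neural networks must be trained on large datasets. Training involves feeding the network labeled examples, comparing its predictions with the correct answers, and adjusting the weights to reduce the error, repeated over many passes through the data.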

Over time, the network becomes more accurate in predicting outcomes. Networks with many layers, the basis of deep learning, can perform even more complex tasks.
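A minimal sketch of this idea, using the classic perceptron learning rule rather than the backpropagation used for deep networks: a single perceptron learns the logical AND function by adjusting its weights whenever it makes a mistake. The toy dataset, the learning rate of 0.5, and the 20 training passes are assumptions chosen to keep the example small.

```python
def predict(weights, bias, inputs):
    """Step-activated perceptron: fire (1) if the weighted sum plus bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train(samples, learning_rate=0.5, epochs=20):
    """Perceptron learning rule: nudge weights in proportion to each error."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Toy dataset (an assumption for illustration): the logical AND function.
and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(and_samples)
predictions = [predict(weights, bias, inputs) for inputs, _ in and_samples]
print(predictions)  # prints: [0, 0, 0, 1]
```

Each wrong prediction shifts the weights slightly toward the correct answer, and after a few passes the perceptron classifies all four examples correctly. Real networks follow the same predict, compare, adjust loop at a much larger scale.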

While perceptrons form the foundation of neural networks, several other types of networks are designed to handle more complex tasks, including convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

Each of these architectures has unique advantages depending on the problem at hand.

Neural networks power many of today’s AI applications, including text generation and image recognition.

Advanced neural network architectures, such as Convolutional Neural Networks (CNNs) for image recognition and Recurrent Neural Networks (RNNs) for sequence-based data, are at the heart of these innovations.

Neural networks are the driving force behind modern AI innovations, enabling machines to learn from data and perform tasks that were once considered impossible. While this article covered a simplified version of the concept, there’s much more to explore—such as advanced training algorithms, deep learning techniques, and sophisticated neural network architectures.

Stay tuned for an upcoming comprehensive deep dive into neural networks in our article series, where we’ll explore the topic in greater detail. This is just the beginning of understanding how neural networks power the future of artificial intelligence!
